Behind the Scenes: Mapping Technical Features for a Mixed Reality Project

As I’ve written about previously, I’m currently working on my first mixed reality video game based on my own concept. It’s an exciting challenge, but one of the first issues on any new project is exploring technical feasibility, particularly with a tight budget or a restricted timeframe.

I’ve developed augmented reality applications before, but this is my first project for the Meta Quest 3. Moreover, my technical knowledge is not as sophisticated as that of our team’s software developer. So I devised a way of facilitating discussion about the project’s concept and scope during our first meeting.

The Process

First, I brainstormed potential hand interactions: how the user might use their hands to interact with mixed reality content and navigate the space around them. You can read more about this initial ideation process in my other blog post here.

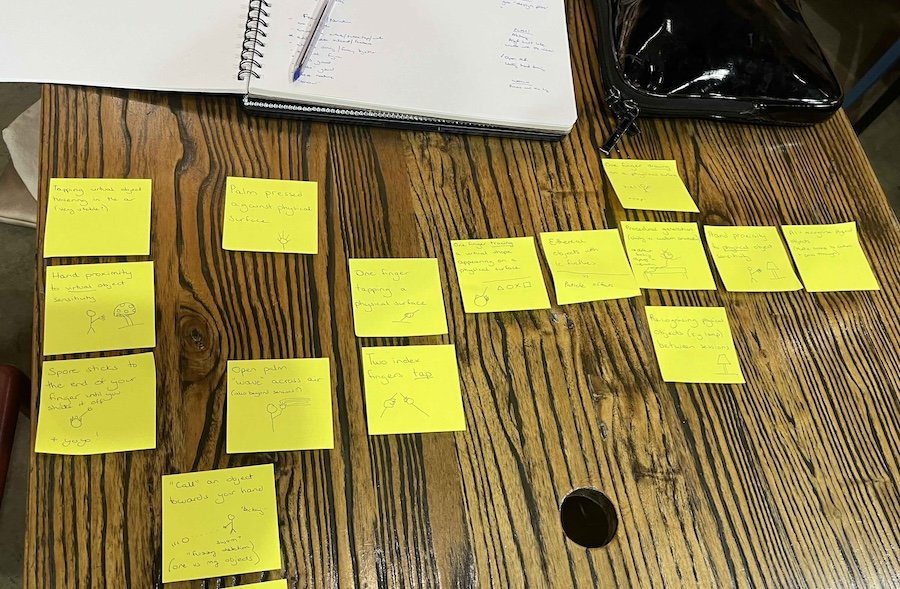

Next, I wrote or sketched each of these interactions onto an individual Post-it note.

When the software developer and I met, we went through the notes one by one. For each, we discussed the type of interaction I envisioned and whether he thought it was feasible, based on his previous experience and his knowledge of Unity and Meta’s out-of-the-box SDKs. We also discussed how complex development might be.

As we talked, we placed each note on a simple continuum on the table, running from easy/quick to hard/complex. We also set aside a separate area for “completely unknown”. I want to emphasise that at this stage we didn’t always know how long a specific task would take. We simply tried to judge, relative to the other tasks, whether one task was likely to take longer or be more complex than another.

The Goal

Our main goal was to sort all the tasks and create an initial work plan showing where to start.

This was really important in deciding how we should prioritise our early-stage prototyping and subsequent playtesting. It also gave my software developer guidance when he started to look into the development tools, frameworks, plug-ins and SDKs we would use for the overall project.

We are now at the stage of developing some simple interactions in Unity that we can playtest with sample users. From their feedback, we hope to identify simple interactions that can form the basis of strong game mechanics and loops. More on the playtesting process in a future article!