Meta Connect 2025 and Takeaways for the XR Industry

Meta Announcement and XR

From the outset of the keynote, with CEO and founder Mark Zuckerberg putting on a pair of Ray-Bans and walking to the stage, through to his opening line, “AI glasses and virtual reality”, Meta’s annual developer conference confirmed the company’s current focus, one that has been in the making for over a decade.

It also demonstrated the company’s recognition of the convergence of Extended Reality (XR) software and hardware with artificial intelligence.

Announcements

Meta Ray-Ban Display

Meta unveiled Meta Ray-Ban Display as its first pair of “AI smart glasses” with an integrated full-colour display in the right lens.

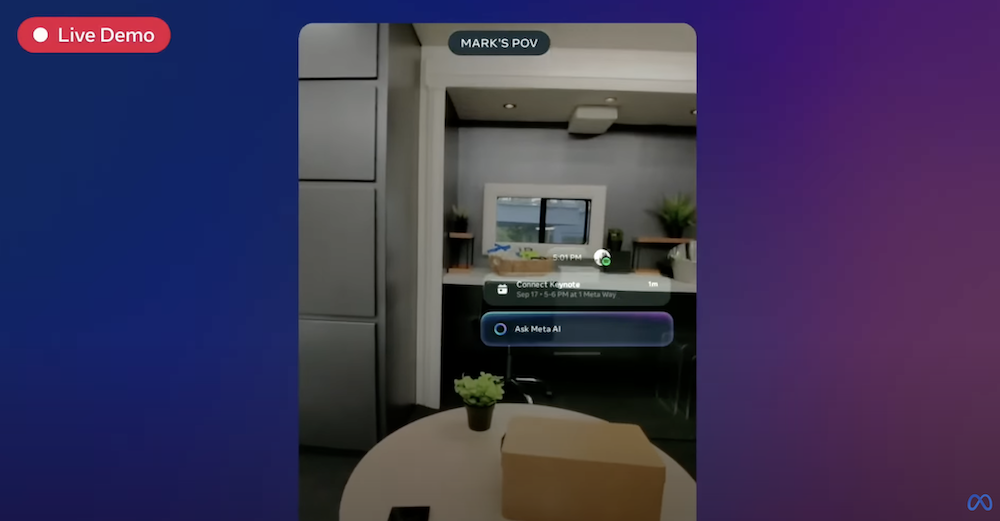

Mark’s live demo (which suffered from “wifi” issues) included integrations accessing Meta AI, live calls, music, messaging and confirmation of voice commands. (If this tech feels familiar, read on…)

The Meta Ray-Ban Display is the latest addition to Meta’s existing “smart glasses” range, which includes voice-activated controls to capture photos, control music or translate conversations in real time.

Meta Neural Band

Meta also introduced the Meta Neural Band, a wearable wristband with a gesture interface based on hand and finger movement. This is a welcome addition, providing users with a more subtle and private mode of input than voice activation, which is not always desirable or possible in public settings.

Oakley Meta Vanguard

Targeting high-performance athletes and sporting enthusiasts, the Oakley Meta Vanguard taps into not only the coveted Oakley and Garmin markets but also general sports enthusiasts. Meta AI works with Garmin smartwatches to capture and provide real-time data on distance and speed. You can also capture and share video, audio and stats.

Learning from Google Glass

If the Meta Ray-Ban Display seems vaguely familiar, you might be recalling Google’s infamous Glass project. Launched in 2013, it was a feat of engineering, integrating a micro display and real-time information, but the general public were not as impressed. What has changed since then, and why might Meta succeed this time round in convincing the public to wear computers on their faces?

We have evolved into online social creatures

A lot of the criticism Google Glass faced was around user experience, which I wrote about back in 2017. In that article I discussed how a lot of the fallout actually stemmed from a disconnect between the primary and secondary users’ experience.

But the differences between Google Glass and Meta’s glasses are stark, not just from 12 years of technical progress in form and function but also in the way that society and culture have evolved.

In 2025 we are all chronically online. We consume large amounts of digital content designed specifically for our screens. Moreover, we have all become creators. Platforms have been built entirely on the premise of User Generated Content (think YouTube, TikTok). Add to that the power of social networks, which have extended far beyond the people we actually know to celebrities, influencers and political figures.

We are all much more aware AND accepting that everyone and anyone may be capturing, recording, livestreaming and sharing in public spaces. But back in 2013 this was simply unheard of.

If you want mass adoption, you need mass appeal

The first point in Zuckerberg’s presentation was: They need to be great glasses first.

Recognising the importance of social acceptance, Meta has strategically partnered with EssilorLuxottica, a company responsible for (and dominating) the design and production of ophthalmic lenses, equipment and instruments, prescription glasses and sunglasses. It owns brands like Ray-Ban, Oakley and Oliver Peoples, and retailers like Sunglass Hut.

While Meta’s glasses are designed with fashion conscious consumers in mind, Google Glass appealed (unintentionally?) to tech enthusiasts with an almost cyberpunk aesthetic.

It’s also a strategic move, given that the people most likely to adopt this type of technology initially are those who ALREADY wear glasses. As someone who has worn prescription glasses since I was 14, I say “bring it on!”

Impact on XR industry

Meta are currently the leaders in the XR landscape, pushing virtual reality (the Metaverse, Horizon Worlds), mixed reality (available via Quest 2 and 3 headsets) and augmented reality (via Instagram filters and Meta Spark Studio; although both are now discontinued, one could argue that the Meta Ray-Bans are picking up this baton).

How have this year’s Meta Connect announcements extended Meta’s lead and overall impact on the XR industry?

Terminology

Meta has positioned these latest products as AI glasses rather than AR glasses, deliberately shying away (for now) from using words like augmented reality or mixed reality.

There are a number of strategic reasons for this approach. Currently the XR landscape suffers from a lack of consensus when it comes to terminology (XR, VR, AR, MR, spatial computing), making things incredibly confusing for consumers.

While technologists debate semantics and technical differences, the public are left scratching their heads as to what the technology can actually do and what its potential applications are. Throw into the mix buzzy terms like “metaverse” and “web3” and it is no wonder there is confusion around XR tech.

Given the current interest in AI, calling these products “AI glasses” is at least (for the time being) something that the public can get their heads around.

AI is all about context

Meta isn’t simply jumping on the AI bandwagon because of the current stranglehold AI has on public consciousness. Its positioning reflects a legitimate understanding of technology convergence.

XR, to date, has largely been about presenting content as an immersive experience: from virtual environments and objects, spatial mapping of the user’s physical environment and spatial audio to a variety of inputs such as controllers, hand tracking, head tracking, gaze and speech.

What AI brings to the table is an understanding of what the user is doing in real time. For example, I could be:

At the botanical gardens, asking “What is the name of this flower?”

Looking at a menu in a foreign language, asking “What does this say?”

Listening to music at my local café, asking “What’s this song?”

Yes, a lot of this technology already exists (thanks, Google Lens, Google Translate and Shazam), but wearing a pair of glasses with this capability removes so much of the current friction:

Taking out your phone

Unlocking it

Locating the right app

Opening that app

Navigating to the right screen

Inputting the request

Viewing the response

Look ma, no phone!

Well, not just yet. These Meta glasses are not standalone products. They still require connection to a mobile phone and the Meta AI app.

But ultimately, I believe that Meta wants to replace mobile phones entirely. In this way, it sees Horizon OS as a direct competitor to Apple’s iOS and Google’s Android operating systems.

Meta wants to seamlessly integrate the offline and online worlds.

What does this mean for the future of narrative design?

Meta is determined to be at the forefront of this new spatial computing revolution with the Metaverse as the ultimate end goal. That is, a persistent and immersive online world that is experienced as a 3D spatial environment. But it’s still very early days.

The new Meta Ray-Ban Display glasses present content as 2D objects, essentially flat dialog boxes.

The next evolution could include AR features such as face and surface detection, which you may remember from Instagram and Facebook filters (which, interestingly, Meta discontinued at the beginning of 2025).

Eventually 3D objects will be positioned within “world space” so that they are anchored and persistent within the user’s physical environment.

Such 3D content would blend seamlessly with the real world. These types of glasses could then be called “mixed reality glasses”, sharing similar capabilities with Meta’s Quest 2 and 3 headsets. And that’s when things will get really interesting…

These glasses would be mobile in a way that isn’t yet practical with the Quest headsets (or Apple Vision Pro).

This would spearhead location-based experiences, from alternate reality games to retail engagement. Buildings, street corners, shopfronts and even people could appear entirely different depending on the user. It could even resemble “Free City” from the movie Free Guy.

Pivotal step change

Meta Connect’s announcements demonstrate the company’s commitment to, and investment in, extended reality technologies. The lack of any such announcements at Apple’s WWDC 2025 event, along with Google’s minimal media around Project Astra, leaves many pundits wondering when the sleeping giants will awaken…

You can watch Meta’s full keynote presentation below (event starts at 31:25)